Kubernetes Logging Architecture

Application and system logs can help you understand what is happening inside your cluster. The logs are useful for debugging problems and monitoring application and cluster activities.

The easiest and most widely adopted logging method for containerized applications is to write to the standard output and standard error streams.

However, the default functionality provided by a container engine or runtime is usually not enough for a complete logging solution. For example, if a container crashes, a pod is evicted, or a node dies, you’ll usually still want to access your application’s logs. Logs should therefore have separate storage and a lifecycle that is independent of nodes, pods, and containers.

This concept is called cluster-level logging. Cluster-level logging requires a separate backend store inside or outside of your cluster.

To understand cluster-level logging in Kubernetes, you need to understand the following:

- Basic logging in Kubernetes

- Logging at the node level

- Cluster-level logging architectures

Basic logging in Kubernetes

First, you need to understand basic logging in Kubernetes, which outputs data to the standard output stream. To illustrate it, I will use a Pod with one container that writes some text to standard output once per second.

    apiVersion: v1
    kind: Pod
    metadata:
      name: counter
    spec:
      containers:
      - name: count
        image: busybox
        args: [/bin/sh, -c,
               'i=0; while true; do echo "$i: $(date)"; i=$((i+1)); sleep 1; done']

Use the command below to run this pod.

kubectl apply -f https://raw.githubusercontent.com/timesofcloud/k8s/master/debug/counter-pod.yaml

Output

pod/counter created

Use the command below to view the logs.

kubectl logs counter

Output

0: Wed Jul 15 00:00:00 UTC 2020

1: Wed Jul 15 00:00:01 UTC 2020

2: Wed Jul 15 00:00:02 UTC 2020

3: Wed Jul 15 00:00:03 UTC 2020

4: Wed Jul 15 00:00:04 UTC 2020

Accessing logs from a crashed container

You can retrieve logs from a previous instantiation of a container by using the --previous flag, for example: kubectl logs counter --previous

Logs from multiple containers

If your pod has multiple containers, you should specify which container’s logs you want to access by appending a container name (using the -c flag) to the command, for example: kubectl logs counter -c count. See the kubectl logs documentation for more details.

Logging at the node level

Everything a containerized application writes to stdout and stderr is handled and redirected somewhere by a container engine. For example, the Docker container engine redirects those two streams to a logging driver, which is configured in Kubernetes to write to a file in JSON format.

Note: The Docker JSON logging driver treats each line as a separate message. When using the Docker logging driver, there is no direct support for multi-line messages. You need to handle multi-line messages at the logging agent level or higher.

If a container restarts, the kubelet keeps one terminated container along with its logs. If a pod is evicted from the node, however, all of its containers are evicted as well, together with their logs.

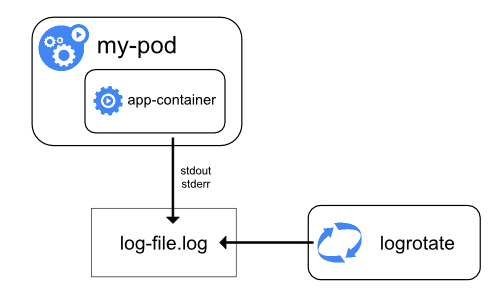

Node-level Log Rotation

Log rotation is a must for node-level logging; otherwise, logs will eventually consume all available storage on the node. Kubernetes is not responsible for rotating logs, but the Kubernetes provisioning tool should set up a solution to address that.

For example, in Kubernetes clusters provisioned by the kube-up.sh script, a logrotate tool is configured to run each hour.

Cluster-level logging architectures

Kubernetes does not provide a native solution for cluster-level logging, but there are several common approaches you can consider:

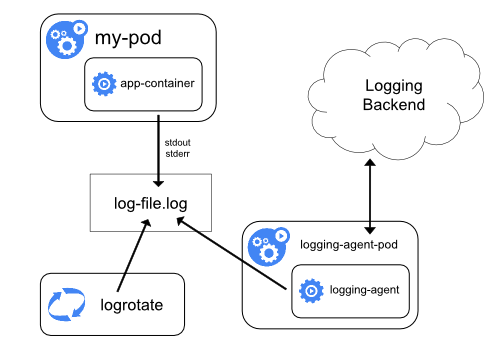

- A node-level logging agent that runs on every node

- A sidecar container for logging in an application pod

- Push logs directly to a backend from within an application

Using a Node Logging Agent:

You can achieve cluster-level logging by including a node-level logging agent on each node. The logging agent is a dedicated tool that exposes logs or pushes them to a backend. Generally, the logging agent is a container that has access to a directory with log files from all of the application containers on that node.

Because the logging agent must run on every node, it’s common practice to run it as a DaemonSet replica, a manifest pod, or a dedicated native process on the node.
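The DaemonSet option can be sketched roughly as follows. This is a minimal illustration, not a production manifest: the name log-agent, the kube-system namespace, and the image example.com/logging-agent:1.0 are placeholders, and the host paths assume a Docker-based node where container log files live under /var/log and /var/lib/docker/containers.

```yaml
# Sketch only: names, namespace, and image are hypothetical placeholders.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: log-agent
  namespace: kube-system
  labels:
    app: log-agent
spec:
  selector:
    matchLabels:
      app: log-agent
  template:
    metadata:
      labels:
        app: log-agent
    spec:
      containers:
      - name: agent
        # Placeholder image; substitute the logging agent you actually use.
        image: example.com/logging-agent:1.0
        volumeMounts:
        # Read-only access to the node's log directories (assumed Docker layout).
        - name: varlog
          mountPath: /var/log
          readOnly: true
        - name: dockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: dockercontainers
        hostPath:
          path: /var/lib/docker/containers
```

Because a DaemonSet schedules one pod per node, the agent automatically follows the cluster as nodes are added or removed.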

Node-level logging only works for an application’s standard output (stdout) and standard error (stderr).

Two optional logging agents are packaged with the Kubernetes release: Stackdriver Logging (used in Google Cloud Platform) and Elasticsearch. Both use fluentd with custom configuration as an agent on the node.

A sidecar container for logging in an application pod:

A sidecar container can be used in one of the following ways:

- Streaming sidecar container: The sidecar container streams application logs to its own stdout.

- Sidecar container with a logging agent: The sidecar container runs a logging agent, which is configured to pick up logs from an application’s container.
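The streaming variant can be sketched with a pod in which the application writes to a file on a shared volume and a second container tails that file to its own stdout. This is an illustrative sketch only: the pod name counter-sidecar, the container names, and the path /var/log/app.log are assumptions chosen for the example.

```yaml
# Sketch only: pod name, container names, and log path are illustrative assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: counter-sidecar
spec:
  containers:
  # Application container: writes to a file instead of stdout.
  - name: count
    image: busybox
    args:
    - /bin/sh
    - -c
    - 'i=0; while true; do echo "$i: $(date)" >> /var/log/app.log; i=$((i+1)); sleep 1; done'
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  # Streaming sidecar: tails the shared file to its own stdout.
  - name: count-log
    image: busybox
    args: [/bin/sh, -c, 'tail -n+1 -F /var/log/app.log']
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  volumes:
  # Shared scratch volume that lives as long as the pod.
  - name: varlog
    emptyDir: {}
```

With such a pod running, kubectl logs counter-sidecar -c count-log would read the file's contents through the sidecar, so the usual node-level log handling applies to them as well.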

Exposing logs directly from the application:

Cluster-level logging can be implemented by exposing or pushing logs directly from every application. However, implementing such a logging mechanism is outside the scope of Kubernetes.

Conclusion: Using a node-level logging agent is the most common and generally encouraged approach for a Kubernetes cluster, because it creates only one agent per node and doesn’t require any changes to the applications running on the node.